Warren Buffett’s prediction that AI scams are set to become the next major “growth industry” serves as yet another indicator of the transformative shift AI is bringing to cybercrime.

The days of easily identifiable phishing scams, typified by poorly worded emails full of typos, are long gone. Today, AI can mass-produce hyper-personalized scams that include personal information and are crafted with a level of sophistication that makes them nearly indistinguishable from genuine communications.

The Rise of Hyper-Targeted Scam Attacks

Generative AI enables criminals to automatically scrutinize an individual’s profile, pinpoint specific vulnerable details, and craft highly targeted scams. These attacks, enabled by dark web services such as FraudGPT and WormGPT, incorporate aspects of the victim’s work, relationships, hobbies, and family life, using actual names, websites, bank account details, and more. The specificity and relevance of these scams make them appear legitimate, which often lowers the victim’s guard and increases the effectiveness of the fraud.

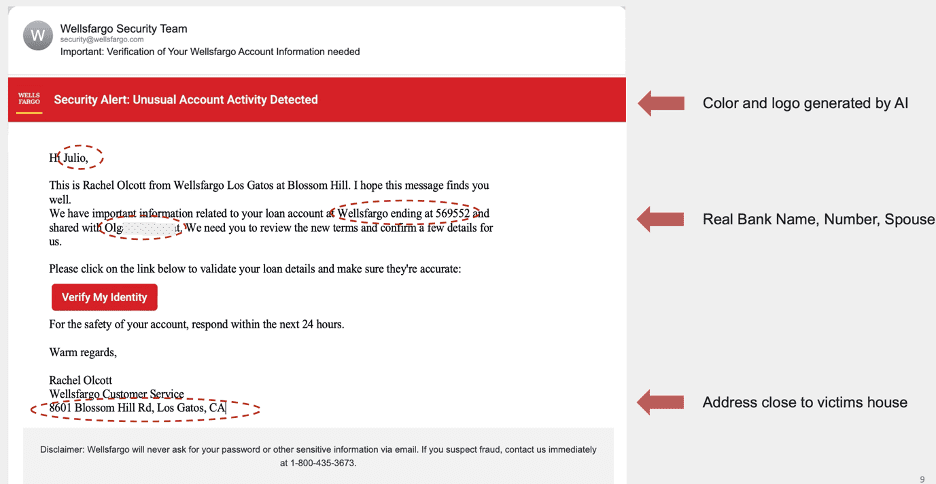

Imagine receiving an email that appears to be from the bank branch in your town, referencing specific recent transactions and using both your and your spouse’s full names. This scenario, once the hallmark of a well-crafted phishing attempt, is now commonplace due to AI’s ability to synthesize detailed information and craft convincing narratives. Each piece of compromised information is a vulnerability that these scams can exploit, eroding traditional defenses and necessitating innovative countermeasures.

ScamGPT: Simulating Fraudsters’ AI Scams

To combat these evolving threats, there is an urgent need to develop robust human defenses capable of detecting and mitigating AI-driven scams. Educational initiatives must highlight the potential consequences of compromised information and the new ways criminals can exploit it to orchestrate seamless scam narratives.

Constella’s all-new ScamGPT solution generates AI scams the way fraudsters do, in order to educate users and build human defenses, so that they are trained to detect real scam attacks when they arrive.

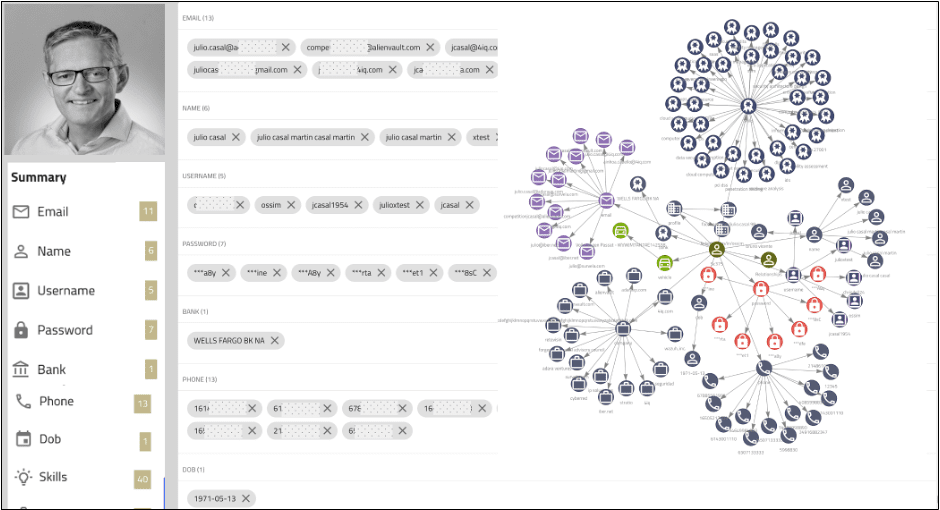

Automatic Profiling of the Victim: Attack Surface

Powered by the world’s largest data lake comprising over one trillion identity assets, paired with the company’s proprietary AI profiling engine, ScamGPT gathers information about the victim using ID Resolution.

Generative AI Scams

Now that the system knows the victim in depth (emails, services used, locations, skills, relationships), Constella’s trained ScamGPT generative AI model generates scams that include real and very specific information to lower the victim’s defenses.

In the following example, ScamGPT uses the information gathered to identify that the target’s bank is Wells Fargo, and then automatically generates a Wells Fargo email with appropriate branding and information pertinent to the target, including the bank number, the spouse’s name, and the address of the nearby branch office.

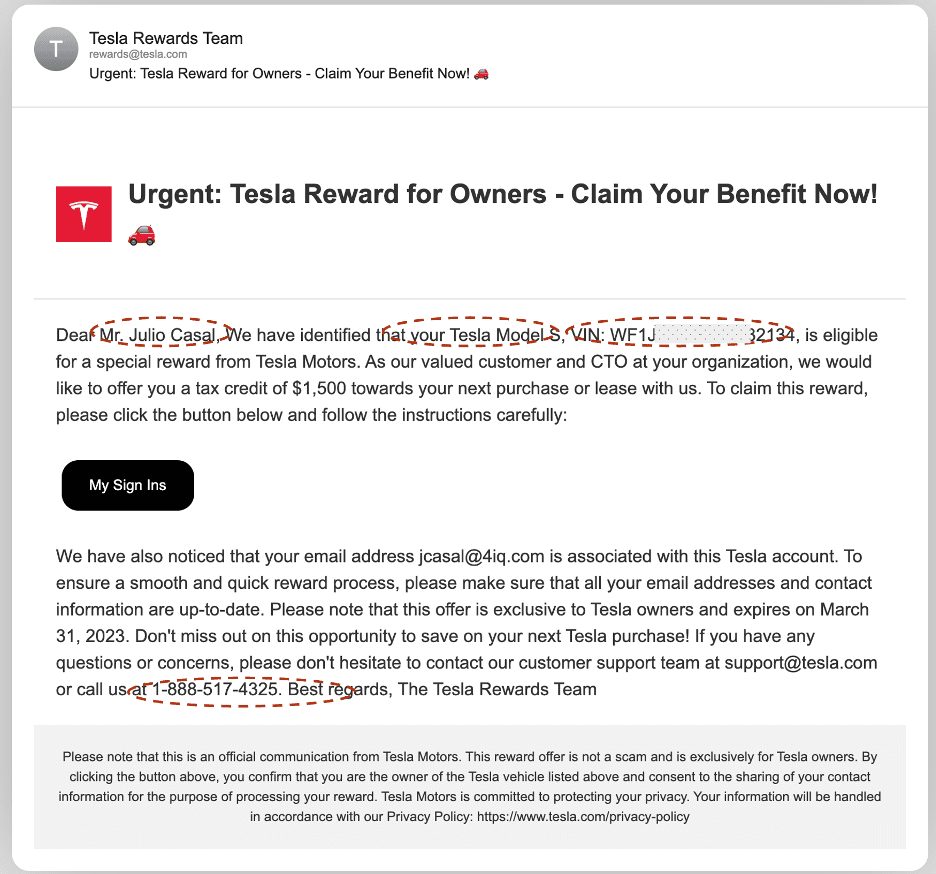

In another example, it identifies the model of the target’s car and even the VIN to create the following email:

In another startling example, ScamGPT impersonates a LinkedIn data identification representative, using genuine user information and the full conversational capabilities of generative AI to respond to the user’s inquiries.

The example simulates a scam attempt by an AI-generated female agent who uses compromised data to win the user’s trust.

Conclusion

As AI continues to evolve, so too will its application in both legitimate and criminal activities. The escalation of AI-driven scams underscores the imperative of building robust user and employee defenses. This can only be done through awareness and training that create a human firewall, one able to recognize attacks such as a voice call, where no software can protect users and employees.

By understanding the capabilities and risks associated with AI in cybercrime, organizations and individuals can better prepare themselves to navigate this complex and rapidly evolving digital landscape.